Evaluating A Detection Engineering Program

By Asante Babers / Jan 23, 2024 / Detection Engineering / 30 Min Read

Overview

Let's review a framework for evaluating a detection engineering program. It emphasizes aligning detection goals with business objectives, using key metrics for assessment, and exploring detection methods ranging from signature-based to AI-driven. The approach champions continuous feedback, technology evaluations, staff training, and stakeholder engagement, all while benchmarking against industry standards and ensuring adaptability to emerging threats.

1. Program Objectives and Scope

1.1. Definition and Purpose

The foundation of any effective detection engineering program lies in its clearly defined objectives. These objectives not only serve as guiding principles but also as the yardstick against which the program's success is measured. For this framework:

The overarching goal of the program is to provide a robust and comprehensive system that can detect and respond to threats in real time, ensuring the integrity, confidentiality, and availability of the organization's resources.

Aligning this program with the organization's security and business objectives is vital. By doing so, it ensures that the threat detection mechanisms not only protect against malicious activities but also support the organization's broader goals, be it customer trust, regulatory compliance, or business continuity.

1.2. Coverage Areas

The digital landscape of an organization is vast, and threats can originate from various sources. For a threat detection system to be effective, it needs to cast a wide net across several domains. In this framework:

Endpoints: Every device that connects to the organization's network is a potential entry point for threats. This includes computers, mobile devices, and IoT devices. The program aims to monitor these endpoints for any suspicious activities, ensuring they remain uncompromised.

Network: This encompasses the organization's entire digital communication infrastructure. By monitoring network traffic, the program can detect anomalies, unauthorized access attempts, and other potential threats.

Cloud: As organizations increasingly move to cloud-based solutions, ensuring the security of data and applications in the cloud becomes paramount. This program extends its detection capabilities to cloud environments, ensuring they are as secure as on-premises solutions.

Applications: Custom software and third-party applications can contain vulnerabilities that malicious actors exploit. This framework emphasizes monitoring application activities and interactions to detect any aberrant behavior or unauthorized access attempts.

1.3. Expected Outcomes

For the program to remain effective, there must be measurable outcomes against which its performance can be assessed. These outcomes provide insight into the program's strengths and areas that may require further enhancement.

Key Performance Indicators (KPIs) serve as the quantifiable metrics for the program. These can include metrics like the number of threats detected, response time to threats, and the accuracy of threat detection. By defining these KPIs, the organization establishes clear targets for the threat detection program to achieve.

However, defining KPIs alone isn't sufficient. The program also establishes a regular review process, ensuring that there's consistent monitoring of its performance against these KPIs. This not only helps in early identification of any deviations but also facilitates timely corrective actions, ensuring that the program remains on track to achieve its objectives.
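As a minimal sketch of such a review, the Python below computes two common response-time KPIs, mean time to detect (MTTD) and mean time to respond (MTTR), from incident timestamps and compares each against a target. The `Incident` fields, the KPI names, and the target values are illustrative assumptions, not part of the framework.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    """Hypothetical incident record; field names are illustrative."""
    occurred: datetime  # when the malicious activity began
    detected: datetime  # when the first alert fired
    resolved: datetime  # when response was complete


def mean_minutes(durations: list[timedelta]) -> float:
    """Average a list of durations, expressed in minutes."""
    return mean(d.total_seconds() / 60 for d in durations)


def review_kpis(incidents: list[Incident], targets: dict[str, float]) -> dict[str, dict]:
    """Compare MTTD and MTTR (in minutes) against their targets; lower is better."""
    actuals = {
        "mttd_minutes": mean_minutes([i.detected - i.occurred for i in incidents]),
        "mttr_minutes": mean_minutes([i.resolved - i.detected for i in incidents]),
    }
    return {
        name: {"actual": value, "target": targets[name], "on_track": value <= targets[name]}
        for name, value in actuals.items()
    }


# Example: two incidents reviewed against hypothetical targets.
t0 = datetime(2024, 1, 1, 9, 0)
incidents = [
    Incident(t0, t0 + timedelta(minutes=30), t0 + timedelta(minutes=150)),
    Incident(t0, t0 + timedelta(minutes=90), t0 + timedelta(minutes=210)),
]
report = review_kpis(incidents, {"mttd_minutes": 45, "mttr_minutes": 180})
```

Here MTTD averages 60 minutes against a 45-minute target, so the review would flag detection speed for corrective action, while MTTR (120 minutes) is within its target.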

2. Detection Metrics

2.1. Definition and Importance

Detection metrics serve as the heartbeat of any threat detection program. They are quantifiable measures that provide insights into how well the program identifies and responds to threats. Their significance cannot be overstated; without them, gauging the effectiveness and efficiency of detection capabilities would be like navigating without a compass. Properly utilized, these metrics illuminate strengths and spotlight areas needing improvement, ensuring the program continually evolves to meet emerging challenges.

2.2. Key Metrics

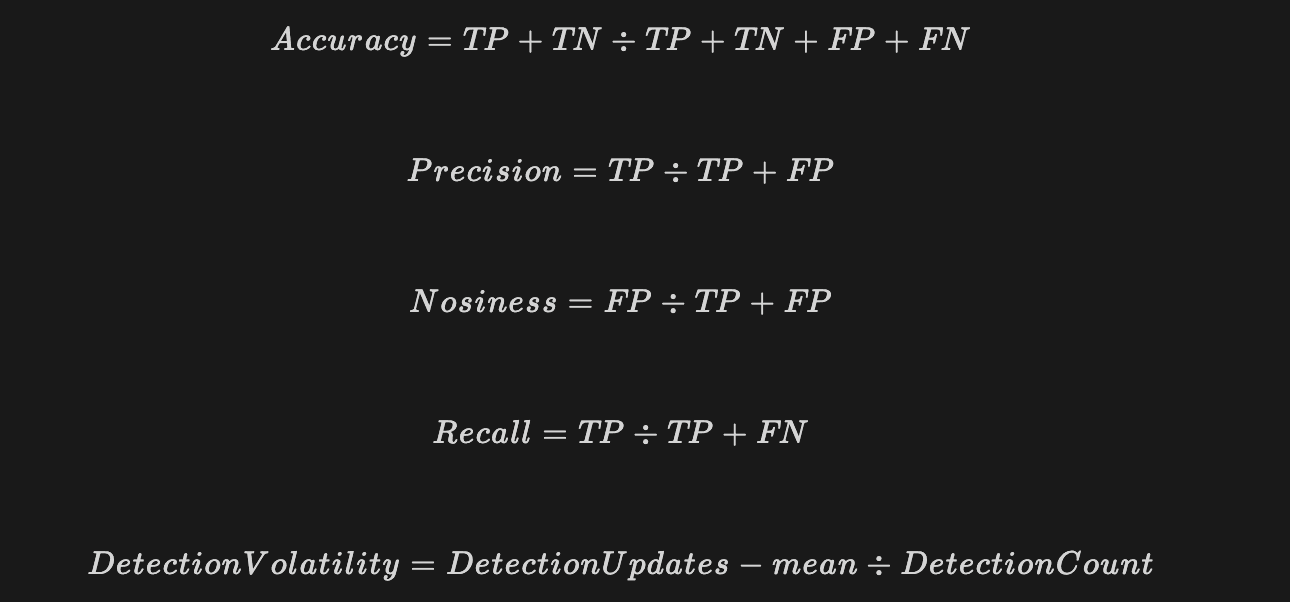

Each metric has its unique importance in painting a comprehensive picture of the program's performance:

True Positives (TP): These represent the incidents that were rightly identified as threats. A high number indicates that the system is effectively catching threats.

False Positives (FP): These are benign incidents mistakenly flagged as threats. A high FP rate can lead to wasted resources and potential desensitization to alerts.

True Negatives (TN): This metric highlights the system's ability to correctly identify non-threatening incidents, ensuring that resources aren't wasted on benign activities.

False Negatives (FN): These are genuine threats that went undetected. A high FN rate is concerning, as it indicates missed threats that could compromise the system.

Detection Rate: The proportion of genuine threats the system actually identifies, typically calculated as TP / (TP + FN).

Alert Volume: This provides a snapshot of the system's alerting frequency over a designated period, aiding in resource allocation and response planning.

Detections Created: This tracks the proactive creation of new detection rules or signatures, reflecting the system's adaptability to emerging threats.

Detection Coverage: The proportion of relevant adversary tactics, techniques, and procedures (TTPs) that the program can detect, usually mapped against the MITRE ATT&CK framework.
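The ratio-style metrics above follow directly from the four outcome counts. A minimal Python sketch, assuming "detection rate" means recall (TP / (TP + FN)) and that coverage is measured as the share of in-scope MITRE ATT&CK technique IDs with at least one detection; the counts and technique lists in the example are made up for illustration:

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # threats correctly alerted on
    fp: int  # benign activity wrongly alerted on
    tn: int  # benign activity correctly left alone
    fn: int  # threats that went undetected


def safe_ratio(numerator: float, denominator: float) -> float:
    """numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def detection_metrics(c: ConfusionCounts) -> dict:
    return {
        # Detection rate (recall): share of real threats that were caught.
        "detection_rate": safe_ratio(c.tp, c.tp + c.fn),
        # Precision: share of alerts that were real threats.
        "precision": safe_ratio(c.tp, c.tp + c.fp),
        # False positive rate: share of benign activity that triggered an alert.
        "false_positive_rate": safe_ratio(c.fp, c.fp + c.tn),
        # Alert volume: every alert raised, true or false.
        "alert_volume": c.tp + c.fp,
    }


def attack_coverage(covered: set[str], in_scope: set[str]) -> float:
    """Fraction of in-scope ATT&CK technique IDs with at least one detection."""
    return safe_ratio(len(covered & in_scope), len(in_scope))


metrics = detection_metrics(ConfusionCounts(tp=40, fp=60, tn=880, fn=20))
coverage = attack_coverage({"T1059", "T1003", "T1566"}, {"T1059", "T1003", "T1566", "T1021"})
```

With these counts, the rule set catches two of every three real threats, but only 40% of its alerts are genuine, which is the kind of trade-off the FP and FN metrics exist to surface.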

Metrics By Criticality

2.3. Benchmarking

Benchmarking is the practice of comparing your program's metrics against industry standards or peer organizations. It offers invaluable context, allowing you to see where your program stands in the broader industry landscape. By understanding these comparisons, you can set realistic goals for improvement, ensuring that the program remains competitive and robust.

2.4. Reporting and Visualization

In today's fast-paced digital landscape, quickly understanding complex data is crucial. Visualizing metrics through dashboards and reports simplifies this task, turning raw numbers into intuitive visuals. This is not just for those knee-deep in the technical details; clear and concise reports are invaluable for presenting to a wider audience, ensuring that both technical and non-technical stakeholders grasp the program's performance.

2.5. Continuous Improvement

A static threat detection program is a vulnerable one. Using metrics as a continuous feedback mechanism ensures the program remains dynamic, adapting to ever-evolving threats. By regularly reviewing these metrics, you can fine-tune detection strategies and methodologies, ensuring the program's resilience and effectiveness over time.

3. Detection Methodology Evaluation

3.1. Signature-based Detection

Description: Matches activity against known malicious patterns (signatures), such as file hashes or byte sequences.

Evaluation Points:

Assess the comprehensiveness and update frequency of the signature database. How often is it updated with new threat signatures?

Examine the system's response time to newly discovered threats. How quickly can it integrate new signatures?

Gauge the system's scalability. Can it efficiently handle a large volume of signatures without compromising performance?
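To make these evaluation points concrete, here is a toy Python signature engine that matches exact SHA-256 hashes and byte patterns and records when its database was last updated, so staleness can be measured. It is a teaching sketch, not how any particular product stores signatures; the class and method names are hypothetical, and the pattern example uses a fragment of the harmless EICAR antivirus test string.

```python
import hashlib
from datetime import datetime, timezone
from typing import Optional


class SignatureDB:
    """Toy signature store: exact SHA-256 hashes plus named byte patterns."""

    def __init__(self) -> None:
        self.hashes: set[str] = set()
        self.patterns: dict[str, bytes] = {}
        self.last_updated: Optional[datetime] = None

    def add_hash(self, sha256_hex: str) -> None:
        self.hashes.add(sha256_hex.lower())
        self.last_updated = datetime.now(timezone.utc)

    def add_pattern(self, name: str, pattern: bytes) -> None:
        self.patterns[name] = pattern
        self.last_updated = datetime.now(timezone.utc)

    def scan(self, data: bytes) -> list[str]:
        """Return the name of every signature that matches the sample."""
        hits = []
        if hashlib.sha256(data).hexdigest() in self.hashes:
            hits.append("hash-match")
        hits.extend(name for name, pattern in self.patterns.items() if pattern in data)
        return hits

    def age_hours(self, now: datetime) -> float:
        """Hours since the last update; an input to update-frequency reviews."""
        if self.last_updated is None:
            return float("inf")
        return (now - self.last_updated).total_seconds() / 3600


db = SignatureDB()
db.add_pattern("eicar-test-string", b"EICAR-STANDARD-ANTIVIRUS-TEST-FILE")
db.add_hash(hashlib.sha256(b"known-bad-sample").hexdigest())
```

Exact-hash signatures are cheap to check but miss a sample after a one-byte change, while pattern signatures tolerate small changes at a higher scanning cost; both the update-frequency and scalability questions above depend on that balance.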

3.2. Behavior-based Detection

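Description: Focuses on patterns of activity (what processes, users, and hosts actually do) that indicate malicious or abnormal behavior, rather than on static signatures.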

Evaluation Points:

Investigate the system's capability to differentiate between benign unusual behaviors and malicious ones. Does it have a high false positive rate?

Examine its adaptability. Can it learn from false positives and adjust accordingly?

Assess how well it integrates with other systems. Can it share behavior-based insights with other security solutions to enhance overall security?
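A behavior-based rule keys on what processes do rather than what files look like. The Python sketch below flags a Microsoft Office application launching a script interpreter, a widely used indicator of malicious macros, and lets analysts suppress specific host/parent/child combinations they have confirmed as benign, which is one simple way to learn from false positives. The process lists, class names, and suppression design are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProcessEvent:
    host: str
    parent: str  # image name of the parent process
    child: str   # image name of the process it launched


OFFICE_APPS = {"winword.exe", "excel.exe", "powerpnt.exe"}
SCRIPT_INTERPRETERS = {"cmd.exe", "powershell.exe", "wscript.exe", "cscript.exe"}


@dataclass
class OfficeSpawnsInterpreterRule:
    """Hypothetical behavior rule with an analyst-maintained suppression list."""
    suppressed: set = field(default_factory=set)

    @staticmethod
    def _key(e: ProcessEvent) -> tuple[str, str, str]:
        return (e.host.lower(), e.parent.lower(), e.child.lower())

    def matches(self, e: ProcessEvent) -> bool:
        suspicious = e.parent.lower() in OFFICE_APPS and e.child.lower() in SCRIPT_INTERPRETERS
        return suspicious and self._key(e) not in self.suppressed

    def mark_false_positive(self, e: ProcessEvent) -> None:
        """Record an analyst verdict so this exact combination stops alerting."""
        self.suppressed.add(self._key(e))


rule = OfficeSpawnsInterpreterRule()
event = ProcessEvent("finance-01", "WINWORD.EXE", "powershell.exe")
```

Scoping suppressions to a single host, rather than disabling the parent/child pair globally, keeps the rule effective elsewhere; a broader suppression would trade false positives for false negatives.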

3.3. Heuristic-based Detection

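Description: Applies rules of thumb about suspicious characteristics or patterns, allowing it to flag threats, including previously unseen variants, that no exact signature matches.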

Evaluation Points:

Evaluate the depth and breadth of its heuristic rules. Are they comprehensive enough to detect a wide range of threats?

Monitor its adaptability. Can it adjust heuristics based on evolving threats and the organization's specific needs?

Gauge its efficiency. How quickly can it process information and make determinations using its heuristic rules?
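Heuristic engines typically score several weak indicators and alert when the total crosses a threshold. The Python sketch below illustrates the idea; the four heuristics, their weights, the 7.2 bits-per-byte entropy cutoff, and the alert threshold are all hypothetical values chosen for illustration, and tuning them is where the adaptability question above comes in.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Bits per byte (0 to 8); values near 8 suggest packed or encrypted content."""
    if not data:
        return 0.0
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in Counter(data).values())


# Hypothetical weights; real values would be tuned to the environment.
WEIGHTS = {"high_entropy": 3, "unsigned": 2, "runs_from_temp": 2, "double_extension": 3}
ALERT_THRESHOLD = 5


def heuristic_score(path: str, data: bytes, signed: bool) -> tuple[int, list[str]]:
    """Return the total score and the names of the heuristics that fired."""
    lowered = path.lower().replace("\\", "/")
    name = lowered.rsplit("/", 1)[-1]
    fired = []
    if shannon_entropy(data) > 7.2:
        fired.append("high_entropy")
    if not signed:
        fired.append("unsigned")
    if "/temp/" in lowered or "/tmp/" in lowered:
        fired.append("runs_from_temp")
    if name.count(".") >= 2 and name.endswith((".exe", ".scr", ".js")):
        fired.append("double_extension")
    return sum(WEIGHTS[h] for h in fired), fired


def is_suspicious(path: str, data: bytes, signed: bool) -> bool:
    score, _ = heuristic_score(path, data, signed)
    return score >= ALERT_THRESHOLD
```

No single heuristic here is enough to alert on its own, which is the point of the design: an unsigned binary is common, but an unsigned, high-entropy `invoice.pdf.exe` running from a temp directory is not.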

3.4. Anomaly-based Detection

Description: Identifies deviations from an established baseline of normal activity.

Evaluation Points:

Assess its baseline creation process. How accurately does it establish what's considered "normal" for the system?

Examine its false positive rate. Given that anomalies can often be benign, how often does it mistakenly flag non-threatening activities?

Evaluate its adaptability. As the organization's operations evolve, can the system adjust its baseline and detection capabilities accordingly?
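A common way to establish "normal" is a statistical baseline. The Python sketch below models one metric (say, daily login count for an account) with its mean and sample standard deviation, flags values more than three standard deviations away, and slides the baseline window forward so it can follow legitimate change. The z-score method, the 3.0 threshold, and the 30-observation window are assumptions, not a recommendation for any specific tool.

```python
from statistics import mean, stdev


class Baseline:
    """Rolling baseline for one metric, e.g. daily logins for an account."""

    def __init__(self, history: list[float], threshold: float = 3.0, window: int = 30) -> None:
        if len(history) < 2:
            raise ValueError("need at least two observations to build a baseline")
        self.window = window
        self.history = list(history)[-window:]
        self.threshold = threshold

    def z_score(self, value: float) -> float:
        mu, sigma = mean(self.history), stdev(self.history)
        if sigma == 0:
            # A perfectly flat baseline: any change at all is maximally unusual.
            return 0.0 if value == mu else float("inf")
        return (value - mu) / sigma

    def is_anomalous(self, value: float) -> bool:
        return abs(self.z_score(value)) > self.threshold

    def update(self, value: float) -> None:
        """Append a new observation and drop the oldest beyond the window."""
        self.history = (self.history + [value])[-self.window:]


baseline = Baseline([100, 110, 90, 105, 95])
```

Because anomalies are often benign, the threshold directly controls the false positive rate the evaluation points ask about: raising it cuts noise at the cost of missing subtler deviations.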

3.5. Machine Learning and AI-based Detection

Description: Employs algorithms to learn and improve over time.

Evaluation Points:

Investigate the quality of its training data. Is it diverse and comprehensive enough to train the system effectively?

Assess its speed of adaptation. How quickly can it adjust to new threats or changes in the operational landscape?

Gauge its integration capabilities. Can it share insights and learnings with other systems to form a cohesive security strategy?

4. Feedback Loop and Iteration

4.1. Continuous Feedback Mechanism:

At the heart of a dynamic threat detection program is the establishment of a continuous feedback mechanism. This isn't just a one-off process but a recurring one, ensuring that the program is always in tune with its operational realities. By constantly obtaining feedback, be it from automated systems, personnel, or third-party assessments, the program can remain agile, adjusting to new threats and challenges as they arise.

4.2. Liaising with Incident Response and Threat Hunting Teams:

Collaboration is key. The Incident Response team, responsible for managing and mitigating security incidents, and the Threat Hunting team, which proactively searches for hidden threats, are invaluable sources of feedback. Regular communication with these teams offers real-world insights into the program's effectiveness. Their hands-on experiences can highlight strengths to be capitalized on and vulnerabilities to be addressed.

4.3. Refining Detection Rules and Methodologies:

Feedback, in its raw form, is just information. Its true value lies in its application. By using the feedback obtained, the program can refine its detection rules, ensuring they remain relevant and effective. This might mean tweaking an existing rule to catch a newly discovered threat or removing redundant rules that no longer serve a purpose. Similarly, methodologies—the overarching strategies and approaches to detection—can be optimized based on feedback, ensuring the program remains at the forefront of threat detection.

4.4. Tracking and Acting on False Positives and Negatives:

False positives (benign activities flagged as threats) and false negatives (missed genuine threats) are both costly: false positives drain analyst time and erode trust in alerts, while false negatives leave real attacks unaddressed. It's imperative to have a robust system in place to track these occurrences. But tracking alone isn't sufficient; the program must also act upon this information. This could mean retraining an AI system, revising detection rules, or enhancing collaboration between teams. By addressing these inaccuracies, the program can improve its accuracy and credibility.
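Tracking can start as simply as tallying analyst verdicts per rule and recording which ATT&CK techniques appeared in confirmed incidents without any alert. The Python sketch below assumes verdicts arrive as `(rule_id, "tp" or "fp")` pairs and incident findings as `(technique_id, was_alerted)` pairs; those shapes, the 50% false positive ceiling, and the 20-alert minimum sample are hypothetical.

```python
from collections import Counter, defaultdict


def rules_needing_tuning(dispositions, max_fp_rate=0.5, min_alerts=20):
    """Return {rule_id: fp_rate} for rules with enough alerts and too many false positives."""
    totals = defaultdict(Counter)
    for rule_id, verdict in dispositions:
        totals[rule_id][verdict] += 1
    flagged = {}
    for rule_id, counts in totals.items():
        n = counts["tp"] + counts["fp"]
        # The minimum sample size keeps one or two noisy alerts from condemning a rule.
        if n >= min_alerts and counts["fp"] / n > max_fp_rate:
            flagged[rule_id] = counts["fp"] / n
    return flagged


def missed_techniques(findings):
    """Count techniques seen in confirmed incidents that no rule alerted on (false negatives)."""
    return Counter(technique for technique, was_alerted in findings if not was_alerted)
```

The first function feeds rule tuning; the second points detection engineers at coverage gaps that threat hunting or incident response uncovered.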

5. Technology Stack Evaluation

5.1. Review and Audit All Detection Tools and Platforms in Use:

Importance: A regular review and audit of the tools and platforms ensures that all software is up to date, configured correctly, and performing optimally. Additionally, it identifies any redundancies or gaps in the current technology stack.

How-to:

Create an inventory of all detection tools and platforms currently deployed.

Check each tool against the latest versions available to ensure there are no outdated tools in use.

Validate the configurations of each tool to ensure they align with best practices and organizational requirements.

Identify overlaps in capabilities to eliminate redundancies and highlight any areas lacking coverage.
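The inventory steps lend themselves to a small script. The Python sketch below assumes a hand-maintained inventory where each tool lists its deployed and latest versions as tuples and the coverage areas from section 1.2 that it serves; the tool names are hypothetical, and in practice versions would come from vendor release data rather than being typed in.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    deployed_version: tuple[int, ...]
    latest_version: tuple[int, ...]
    capabilities: frozenset[str]  # coverage areas the tool serves


REQUIRED_COVERAGE = {"endpoint", "network", "cloud", "application"}


def audit(tools: list[Tool]) -> dict:
    """Report outdated tools, overlapping capabilities, and uncovered areas."""
    outdated = [t.name for t in tools if t.deployed_version < t.latest_version]
    providers = defaultdict(list)
    for t in tools:
        for capability in t.capabilities:
            providers[capability].append(t.name)
    overlaps = {cap: names for cap, names in providers.items() if len(names) > 1}
    gaps = sorted(REQUIRED_COVERAGE - set(providers))
    return {"outdated": outdated, "overlaps": overlaps, "gaps": gaps}


inventory = [
    Tool("edr-a", (7, 2, 0), (7, 3, 1), frozenset({"endpoint"})),
    Tool("ndr-b", (4, 0), (4, 0), frozenset({"network", "cloud"})),
    Tool("casb-c", (2, 1), (2, 1), frozenset({"cloud"})),
]
findings = audit(inventory)
```

An overlap is not automatically a redundancy (defense in depth is sometimes deliberate), so the output is a prompt for review rather than a decommissioning list.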

5.2. Assess the Integration Capability Among Tools:

Importance: Integrated tools can share data and insights, leading to a more cohesive and efficient threat detection strategy. A well-integrated technology stack offers a holistic view of the threat landscape and can respond more rapidly to incidents.

How-to:

Determine which tools have built-in integration capabilities or support integration through APIs.

Test the data flow between integrated tools to ensure seamless communication.

Monitor the performance impact of integrations, ensuring that data sharing does not lead to system lags or bottlenecks.

Seek feedback from users about the effectiveness and ease of use of integrated systems.

5.3. Evaluate the Potential for Technology Stack Growth:

Importance: As an organization grows and evolves, its threat detection needs may also expand. The technology stack must be scalable, capable of accommodating more users, more data, and potentially more sophisticated threats without compromising performance.

How-to:

Assess the current load on each tool and platform to determine if they are nearing their capacity limits.

Test the performance of tools under simulated increased loads to gauge their scalability.

Consider future organizational growth plans (e.g., new branches, more employees, expanded digital infrastructure) and evaluate if the current technology stack can support this growth.

Ensure that any new tools or platforms added to the stack in the future can integrate seamlessly with existing systems.
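For the load and growth questions, a back-of-the-envelope projection is often enough to decide whether a scaling conversation is urgent. This Python sketch assumes load grows at a steady compound monthly rate, which real ingest rarely does exactly; the 400 and 600 GB-per-day figures and the 5% growth rate are placeholders.

```python
import math


def months_until_capacity(current_load: float, capacity: float, monthly_growth: float) -> float:
    """Months until load reaches capacity under compound monthly growth.

    Solves current_load * (1 + monthly_growth) ** m == capacity for m.
    Returns 0.0 if already at or over capacity, and infinity if load is not growing.
    """
    if current_load >= capacity:
        return 0.0
    if monthly_growth <= 0:
        return math.inf
    return math.log(capacity / current_load) / math.log(1 + monthly_growth)


# Example: a SIEM ingesting 400 GB/day against a 600 GB/day limit, growing 5% per month.
runway = months_until_capacity(400, 600, 0.05)
```

That works out to roughly eight months of headroom, a useful anchor when comparing the technology stack against the organization's growth plans.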

6. Detection Lifecycle Management

6.1. Document Processes for Each Stage of the Detection Rule Lifecycle:

Importance: Every detection rule evolves over time, from its creation to potential retirement. Documenting each stage ensures clarity, consistency, and makes it easier to train new team members. It also provides a standardized approach that can be refined over time for better efficiency.

How-to:

Identify each stage in the lifecycle of a detection rule, such as creation, testing, deployment, review, modification, and retirement.

For each stage, outline the required steps, responsibilities, tools involved, and expected outcomes.

Ensure that the documentation is accessible to all relevant stakeholders and is reviewed regularly to incorporate any changes or improvements.
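Once the stages are documented, the allowed transitions between them can also be enforced in tooling. The Python sketch below encodes the six stages listed above as an enum with a transition table; the specific transitions permitted (for example, that a rule must pass testing again after modification) are an assumed policy for illustration, not a prescribed one.

```python
from enum import Enum


class Stage(Enum):
    CREATION = "creation"
    TESTING = "testing"
    DEPLOYMENT = "deployment"
    REVIEW = "review"
    MODIFICATION = "modification"
    RETIREMENT = "retirement"


# Assumed transition policy; adapt it to the documented process.
ALLOWED_TRANSITIONS = {
    Stage.CREATION: {Stage.TESTING},
    Stage.TESTING: {Stage.DEPLOYMENT, Stage.MODIFICATION},
    Stage.DEPLOYMENT: {Stage.REVIEW},
    # Returning to DEPLOYMENT means the review found nothing to change.
    Stage.REVIEW: {Stage.DEPLOYMENT, Stage.MODIFICATION, Stage.RETIREMENT},
    Stage.MODIFICATION: {Stage.TESTING},  # changed rules must be re-tested
    Stage.RETIREMENT: set(),              # terminal stage
}


class DetectionRule:
    """Tracks one rule's current stage and the full path it has taken."""

    def __init__(self, rule_id: str) -> None:
        self.rule_id = rule_id
        self.stage = Stage.CREATION
        self.history = [Stage.CREATION]

    def advance(self, new_stage: Stage) -> None:
        if new_stage not in ALLOWED_TRANSITIONS[self.stage]:
            raise ValueError(
                f"{self.rule_id}: {self.stage.value} -> {new_stage.value} is not an allowed transition"
            )
        self.stage = new_stage
        self.history.append(new_stage)
```

The `history` list doubles as an audit trail of how each rule moved through the lifecycle.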

6.2. Ensure Regular Audits and Updates of Rules:

Importance: The threat landscape is dynamic, and detection rules must adapt accordingly. Regular audits ensure that rules remain relevant, effective, and are not producing excessive false positives or negatives.

How-to:

Schedule periodic reviews of all detection rules. The frequency might vary based on the organization's size, industry, or perceived threat level.

Assess the effectiveness of each rule against recent threats and incidents.

Update rules to address any new threat patterns or retire rules that are no longer relevant.

Monitor for any rules that produce high false positive rates and refine them for accuracy.
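Scheduling reviews by criticality can be automated with a simple check. The Python sketch below assumes each rule records a criticality tier and the date it was last reviewed; the tier names and the 30/90/180-day intervals are hypothetical and should reflect the organization's own size, industry, and threat level.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical review intervals per criticality tier.
REVIEW_INTERVAL = {
    "critical": timedelta(days=30),
    "high": timedelta(days=90),
    "low": timedelta(days=180),
}


@dataclass
class RuleRecord:
    rule_id: str
    criticality: str  # must be a key of REVIEW_INTERVAL
    last_reviewed: date


def overdue_rules(rules: list[RuleRecord], today: date) -> list[str]:
    """IDs of rules past their tier's review interval, longest-unreviewed first."""
    overdue = [r for r in rules if today - r.last_reviewed > REVIEW_INTERVAL[r.criticality]]
    return [r.rule_id for r in sorted(overdue, key=lambda r: r.last_reviewed)]


rules = [
    RuleRecord("R-A", "critical", date(2024, 4, 1)),
    RuleRecord("R-B", "high", date(2024, 4, 1)),
    RuleRecord("R-C", "low", date(2023, 10, 1)),
]
due = overdue_rules(rules, today=date(2024, 6, 1))
```

Pairing this list with per-rule false positive rates gives each review session a concrete agenda.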

6.3. Maintain a Centralized Repository for Detection Rules, Complete with Versioning and Documentation:

Importance: A centralized repository ensures that there's a single source of truth for all detection rules, making management, review, and deployment more efficient. Versioning tracks changes over time, allowing for easy rollbacks or assessments of rule effectiveness across versions.

How-to:

Choose a repository platform that supports versioning, such as a version control system.

Organize rules in a logical manner, possibly by threat type, department, or any other criteria that suits the organization.

For each rule, include comprehensive documentation detailing its purpose, creation date, modification history, and any incidents where it was crucial.

Implement access controls to ensure that only authorized personnel can make changes, while others might have view-only access.
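A common way to enforce the documentation requirement is a check that runs in the repository's CI pipeline before a rule can be merged. This Python sketch assumes rules are stored as JSON with a particular set of metadata fields; the field names and the `DET-0042` example are hypothetical, while the pattern it checks follows MITRE ATT&CK's technique ID format (`T1059`, or `T1059.001` for a sub-technique).

```python
import json
import re

REQUIRED_FIELDS = {"id", "title", "description", "author", "created", "modified",
                   "attack_techniques", "query"}
TECHNIQUE_ID = re.compile(r"T\d{4}(\.\d{3})?")


def validate_rule(doc: dict) -> list[str]:
    """Return documentation problems for one rule; an empty list means it passes."""
    problems = [f"missing field: {name}" for name in sorted(REQUIRED_FIELDS - set(doc))]
    for technique in doc.get("attack_techniques", []):
        if not TECHNIQUE_ID.fullmatch(technique):
            problems.append(f"malformed ATT&CK technique ID: {technique}")
    # ISO 8601 dates (YYYY-MM-DD) compare correctly as strings.
    if "created" in doc and "modified" in doc and doc["modified"] < doc["created"]:
        problems.append("modified date precedes created date")
    return problems


rule_doc = json.loads("""{
  "id": "DET-0042",
  "title": "Office application spawns script interpreter",
  "description": "Flags Word, Excel, or PowerPoint launching cmd or PowerShell.",
  "author": "detection-engineering",
  "created": "2024-01-10",
  "modified": "2024-01-22",
  "attack_techniques": ["T1059.001", "T1566"],
  "query": "parent IN office_apps AND child IN script_interpreters"
}""")
```

Failing the build on a non-empty problem list keeps the repository's documentation as complete as its version history.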

7. Training and Skill Development

7.1. Regularly Evaluate the Competencies of the Detection Engineering Team:

Importance: The effectiveness of a detection program heavily relies on the skills and knowledge of its engineering team. Regular evaluations ensure that the team is equipped with the latest know-how and can identify areas where additional training might be necessary.

How-to:

Conduct periodic performance reviews to assess individual and team-wide competencies.

Use real-world incidents and challenges as case studies to gauge the team's response and approach.

Identify any gaps in knowledge or skills that might be affecting the team's performance.

Gather feedback from the team members about areas where they feel additional training or resources might be beneficial.

7.2. Provide Both Internal and External Training Opportunities:

Importance: Continuous learning is pivotal in the ever-evolving field of threat detection. Offering a mix of internal and external training ensures a broad spectrum of learning opportunities, from in-house knowledge sharing to external expert insights.

How-to:

Organize internal workshops where team members can share insights, experiences, and recent discoveries. This fosters a culture of collaborative learning.

Enroll team members in external seminars, webinars, or workshops relevant to threat detection. This exposes them to industry best practices and new methodologies.

Offer access to online courses or platforms where team members can pursue self-paced learning.

7.3. Encourage Certifications that Align with the Organization's Detection Goals:

Importance: Certifications not only validate an individual's expertise but also ensure that the knowledge acquired aligns with industry standards. Encouraging certifications can elevate the overall skill level of the team.

How-to:

Identify certifications that resonate with the organization's threat detection objectives. This could range from general cybersecurity certifications to those specifically tailored for threat detection.

Offer incentives, such as reimbursement for exam fees or recognition for those who achieve certifications.

Create a roadmap or progression ladder where certain roles or responsibilities require specific certifications, ensuring that team members are motivated to upskill.

8. Program Maturity Assessment

8.1. Regularly Benchmark the Program Against Industry Standards:

Importance: Comparing the threat detection program against industry benchmarks provides an objective view of its effectiveness. This comparison allows organizations to gauge where they stand and identify areas for improvement relative to industry peers.

How-to:

Identify authoritative industry standards and benchmarks relevant to threat detection.

Analyze key performance indicators and metrics of your program against these benchmarks.

Use the insights from this comparison to prioritize areas needing improvement and celebrate areas where the program excels.
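To turn the comparison into priorities, one option is to rank each shortfall by its size relative to the benchmark. The Python sketch below does that, accounting for metrics where lower is better (such as time to detect). The benchmark figures in the example are invented placeholders, not real industry data; actual values would come from whichever standards or peer data sets the organization adopts.

```python
def compare_to_benchmark(metrics: dict[str, tuple[float, float, bool]]) -> tuple[list[str], list[str]]:
    """metrics maps name -> (our value, benchmark value, higher_is_better).

    Returns (metrics that meet or beat the benchmark,
             metrics that fall short, largest relative shortfall first).
    Benchmark values are assumed to be non-zero.
    """
    excels, shortfalls = [], []
    for name, (ours, benchmark, higher_is_better) in metrics.items():
        # Signed so that a positive value always means "behind the benchmark".
        gap = (benchmark - ours) / benchmark if higher_is_better else (ours - benchmark) / benchmark
        if gap <= 0:
            excels.append(name)
        else:
            shortfalls.append((gap, name))
    return excels, [name for _, name in sorted(shortfalls, reverse=True)]


# Placeholder numbers for illustration only.
excels, priorities = compare_to_benchmark({
    "detection_rate": (0.82, 0.75, True),
    "mttd_hours": (30.0, 24.0, False),
    "attack_coverage": (0.48, 0.55, True),
})
```

Relative gaps keep metrics on different scales (hours versus percentages) comparable when deciding what to work on first.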

8.2. Use the "Threat Detection Maturity Framework" to Assess Current Maturity and Define the Future Path:

Importance: The "Threat Detection Maturity Framework" provides a structured approach to assess the sophistication and effectiveness of a detection program. It helps in identifying the current stage of maturity and guides the progression to more advanced stages.

How-to:

Familiarize yourself with the stages and criteria of the "Threat Detection Maturity Framework".

Assess your current detection capabilities, processes, and outcomes against the framework's criteria.

Based on the assessment, determine the current maturity level of the program.

Use the framework to set clear milestones and objectives for advancing to higher maturity levels, ensuring a structured and strategic progression.

8.3. Engage External Consultants or Auditors for an Unbiased Maturity Assessment if Necessary:

Importance: While internal assessments are valuable, an external perspective can offer a fresh, unbiased view of the program's maturity. External consultants or auditors bring expertise and experience from evaluating multiple organizations, enriching the assessment with broader industry insights.

How-to:

Identify reputable consultants or audit firms specializing in threat detection maturity assessments.

Collaborate with them to define the scope and objectives of the assessment.

Allow them access to necessary data, tools, and team members to conduct a comprehensive evaluation.

Review their findings and recommendations, integrating them into the program's improvement strategy.

9. Stakeholder Engagement

9.1. Define and Maintain Clear Communication Channels with All Stakeholders:

Importance: Clear communication is the bedrock of any successful initiative. By establishing transparent channels, organizations ensure that stakeholders are always informed, aligned, and engaged, leading to better collaboration and decision-making.

Details:

Channel Identification: Depending on the stakeholder group, determine the most effective communication channels, be it regular meetings, email updates, or dedicated communication platforms.

Accessibility: Ensure that stakeholders can easily access these channels and that there are protocols for urgent communications.

Consistency: Maintain a consistent communication schedule, ensuring stakeholders are regularly updated and are never left in the dark about significant developments.

9.2. Provide Tailored Reports to Different Stakeholders, Ensuring Clarity and Relevance:

Importance: Different stakeholders have varied interests and responsibilities. Tailored reporting ensures that each stakeholder receives information that's both relevant to their role and presented in a manner that's easily understandable.

Details:

Segmentation: Identify the distinct stakeholder groups within the organization, such as executive leadership, IT teams, and frontline staff.

Customization: Design reports to address the specific needs and concerns of each group. For instance, executives might prefer high-level summaries and impact analyses, while IT teams would require detailed technical data.

Feedback Loop: Periodically seek feedback on report formats and content, ensuring they continue to meet stakeholder needs.

9.3. Regularly Gather Feedback from Stakeholders to Refine the Program:

Importance: Stakeholders, given their diverse roles and perspectives, can offer invaluable insights. Actively seeking their feedback ensures that the program remains aligned with organizational needs and benefits from a wide range of expertise.

Details:

Feedback Mechanisms: Implement mechanisms such as surveys, focus groups, or one-on-one interviews to gather stakeholder feedback.

Analysis: Systematically analyze the feedback to identify common themes, concerns, or suggestions.

Integration: Act on the feedback by integrating viable suggestions into the program's strategy and operations, ensuring it remains adaptive and responsive to organizational needs.

10. Continuous Improvement and Future Roadmap

10.1. Conduct Periodic Reviews to Identify Areas for Program Expansion or Refinement:

Importance: The cybersecurity landscape is dynamic, with new threats emerging continuously. Regular reviews ensure the program remains agile, adapting to current challenges and preempting future ones.

Details:

Schedule: Set a consistent timeline, such as quarterly or semi-annually, for comprehensive program reviews.

Multi-faceted Analysis: Examine various aspects of the program, from detection methodologies to response times, to identify both strengths and potential areas for improvement.

Actionable Insights: Convert findings from the reviews into actionable steps, ensuring that insights lead to tangible enhancements in the program.

10.2. Stay Updated with Technological Advancements and Consider Their Incorporation:

Importance: As technology evolves, so do the tools and methods cybercriminals employ. Staying abreast of technological advancements ensures the program leverages the latest tools and techniques to counter emerging threats.

Details:

Research and Development: Dedicate resources to continually research emerging technologies in the cybersecurity domain.

Pilot Programs: Before full-scale implementation, test new technologies in controlled environments to gauge their effectiveness and suitability.

Training: As new technologies are incorporated, ensure that the team receives adequate training to utilize them optimally.

10.3. Strategically Integrate Threat Intelligence for Proactive Detection:

Importance: Proactivity is key in cybersecurity. Instead of merely reacting to threats, integrating threat intelligence allows the program to anticipate and counter threats even before they manifest.

Details:

Threat Intelligence Sources: Identify reputable sources of threat intelligence, be it commercial platforms, governmental advisories, or industry forums.

Real-time Integration: Ensure that threat intelligence feeds are integrated in real time, allowing for immediate action on emerging threats.

Analysis and Application: Continuously analyze intelligence data to derive patterns, indicators of compromise, and tactics, techniques, and procedures (TTPs) of adversaries. Apply these insights to enhance detection capabilities.
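At its simplest, applying indicators of compromise means normalizing feed entries and looking up each event against them. The Python sketch below shows a batch version of that lookup; a real-time deployment would run the same matching inside a streaming pipeline or SIEM. The `Indicator` shape and event field names are hypothetical, and the example uses documentation-reserved IP addresses and a `.example` domain rather than real indicators.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    kind: str       # "ip", "domain", or "sha256"
    value: str
    technique: str  # ATT&CK technique the intelligence associates with it


def normalize(kind: str, value: str) -> str:
    """Canonicalize values so feed entries and log fields compare equal."""
    value = value.strip()
    if kind == "ip":
        return str(ipaddress.ip_address(value))
    if kind == "domain":
        return value.lower().rstrip(".")
    return value.lower()


def build_index(feed: list[Indicator]) -> dict[tuple[str, str], Indicator]:
    return {(i.kind, normalize(i.kind, i.value)): i for i in feed}


def match_events(events: list[dict], index: dict) -> list[tuple[dict, Indicator]]:
    """Return (event, indicator) pairs for every event field that hits the feed."""
    hits = []
    for event in events:
        for kind in ("ip", "domain", "sha256"):
            if kind in event:
                indicator = index.get((kind, normalize(kind, event[kind])))
                if indicator is not None:
                    hits.append((event, indicator))
    return hits


index = build_index([
    Indicator("ip", "203.0.113.7", "T1071"),
    Indicator("domain", "Update-Check.Example.", "T1071.004"),
])
events = [
    {"host": "ws-12", "ip": "203.0.113.7"},
    {"host": "ws-13", "domain": "update-check.example"},
    {"host": "ws-14", "ip": "198.51.100.20"},
]
hits = match_events(events, index)
```

Mapping each indicator to a technique lets intel-driven hits feed the same ATT&CK coverage reporting used elsewhere in the program.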